Task-Independent Knowledge Makes for Transferable Representations for Generalized Zero-Shot Learning

Chaoqun Wang, Xuejin Chen*, Shaobo Min, Xiaoyan Sun, Houqiang Li

National Engineering Laboratory for Brain-inspired Intelligence Technology and Application, University of Science and Technology of China

The Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21)

Abstract:

Generalized Zero-Shot Learning (GZSL) targets recognizing new categories by learning transferable image representations. Existing methods find that, by aligning image representations with their corresponding semantic labels, the semantic-aligned representations can be transferred to unseen categories. However, when supervised only by seen category labels, the learned semantic knowledge is highly task-specific, which biases image representations towards seen categories. In this paper, we propose a novel Dual-Contrastive Embedding Network (DCEN) that simultaneously learns task-specific and task-independent knowledge via semantic alignment and instance discrimination. First, DCEN leverages task labels to cluster representations of the same semantic category through cross-modal contrastive learning and by exploring semantic-visual complementarity. Beyond this task-specific knowledge, DCEN introduces task-independent knowledge by attracting representations of different views of the same image and repelling representations of different images. Compared to high-level seen category supervision, this instance discrimination supervision encourages DCEN to capture low-level visual knowledge, which is less biased toward seen categories and alleviates the representation bias. Consequently, the task-specific and task-independent knowledge jointly make the representations of DCEN transferable, and DCEN obtains an average improvement of 4.1% on four public benchmarks.
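
The instance discrimination idea described in the abstract (attract two views of the same image, repel views of different images) can be illustrated with a generic InfoNCE-style loss. The following is a minimal NumPy sketch, not the DCEN implementation: the function names, the `temperature` value, and the use of plain cosine similarity are all assumptions made for illustration, and the paper's actual loss may differ.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)


def instance_discrimination_loss(view_a, view_b, temperature=0.1):
    """Generic InfoNCE-style loss (illustrative assumption, not the paper's exact form).

    Row i of view_a and row i of view_b are embeddings of two augmented
    views of the same image (the positive pair); every other row of
    view_b acts as a negative for row i of view_a.
    """
    a = l2_normalize(view_a)
    b = l2_normalize(view_b)
    logits = a @ b.T / temperature                       # (N, N) similarity matrix
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal; average their negative log-likelihood.
    return float(-np.mean(np.diag(log_prob)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(8, 16))                      # stand-in embeddings
    other_view = feats + 0.01 * rng.normal(size=(8, 16))  # slightly perturbed "second view"
    print(instance_discrimination_loss(feats, other_view))
```

The loss is small when each embedding is closest to its own second view and grows when the positives are mismatched, which is the behavior the attract/repel description calls for.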

Motivation of this paper. (a) Existing methods focus on using task labels to learn semantic-aligned representations, which can be transferred to unseen categories. (b) This paper additionally learns task-independent knowledge via instance discrimination supervision, which significantly improves representation transferability.

Results:

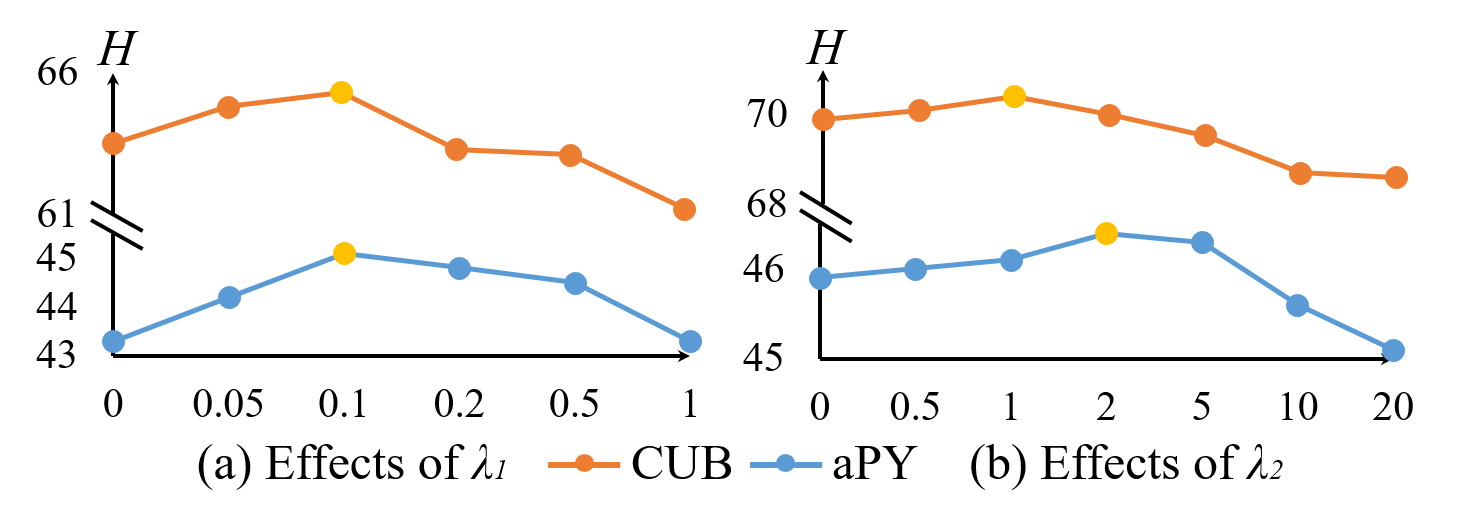

Effects of different $\lambda_1$ and $\lambda_2$ for $\mathcal{L}_{id}$ and $\mathcal{L}_{sp}$, respectively.

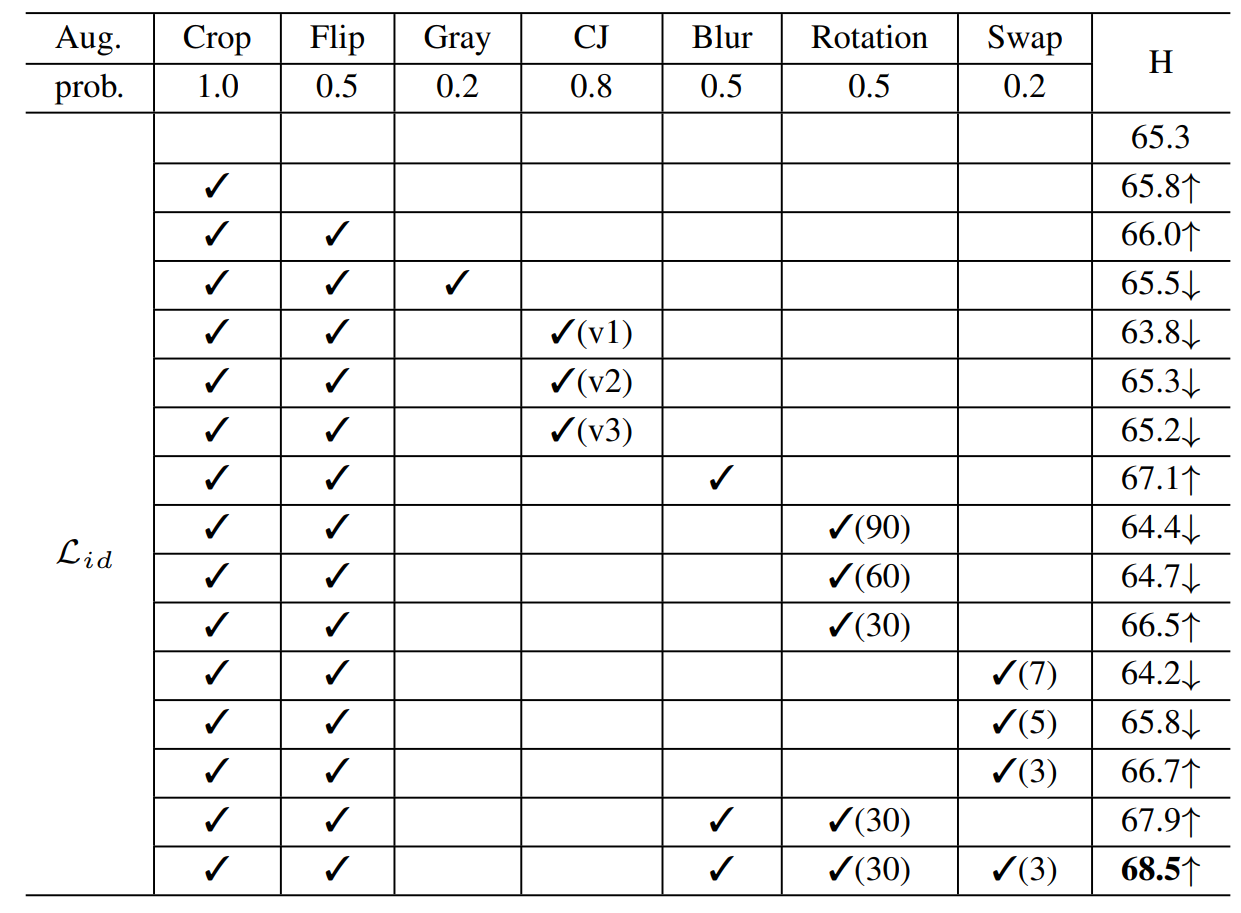

Evaluating different visual augmentations on CUB by successively adding operations. An operation is retained when it brings a positive effect; otherwise, it is removed.
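
The selection procedure in this caption (add operations one at a time, keep each only if it helps) is a greedy forward selection. Below is a minimal Python sketch of that procedure under stated assumptions: `greedy_select`, the `evaluate` callback, the operation names, and the toy scores are all hypothetical placeholders for illustration and are not taken from the paper or its results.

```python
from typing import Callable, List, Sequence


def greedy_select(
    candidates: Sequence[str],
    evaluate: Callable[[List[str]], float],
) -> List[str]:
    """Add candidate operations one by one, keeping each only if the score improves.

    `evaluate` is assumed to train and validate a model with the given list
    of augmentation operations and return a score where higher is better.
    """
    kept: List[str] = []
    best = evaluate(kept)  # baseline score without any augmentation
    for op in candidates:
        score = evaluate(kept + [op])
        if score > best:   # positive effect: retain the operation
            kept.append(op)
            best = score
        # otherwise the operation is dropped and the baseline stays unchanged
    return kept


if __name__ == "__main__":
    # Toy stand-in for a real training run; the gains are made-up placeholders.
    toy_gain = {
        "random_crop": 1.0,
        "horizontal_flip": 0.5,
        "color_jitter": -0.3,
        "grayscale": 0.2,
    }

    def toy_evaluate(ops: List[str]) -> float:
        return 50.0 + sum(toy_gain[o] for o in ops)

    print(greedy_select(list(toy_gain), toy_evaluate))
```

Because each decision is made against the current best configuration, the result depends on the order in which operations are tried; this sketch does not revisit earlier choices.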

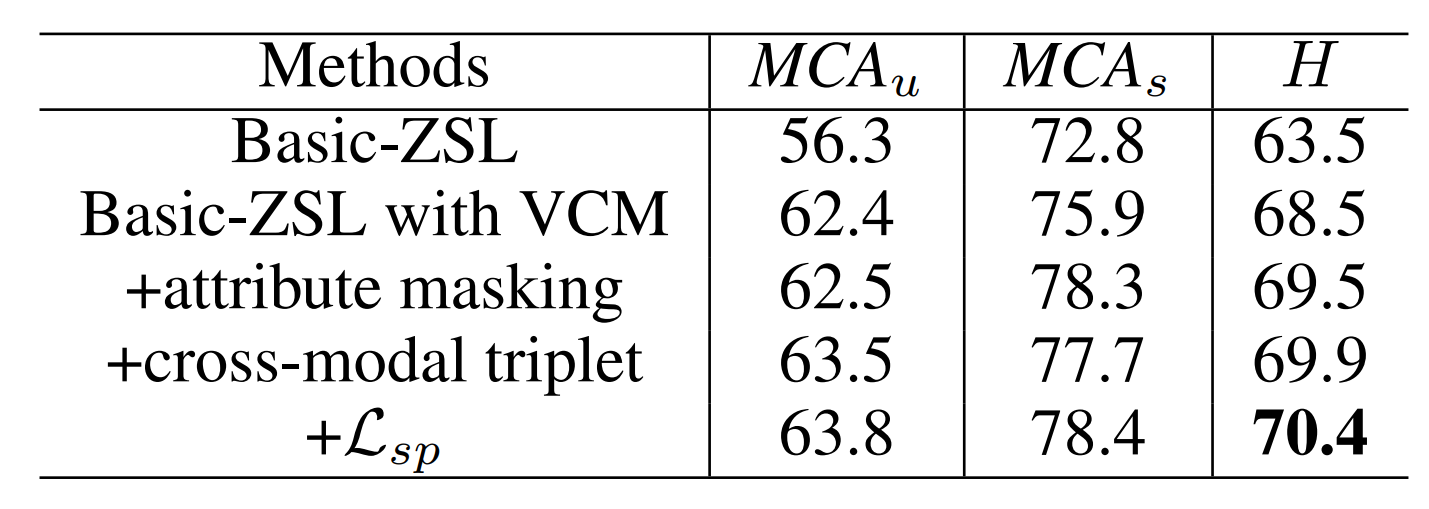

Table 2: Effects of each component of DCEN on CUB.

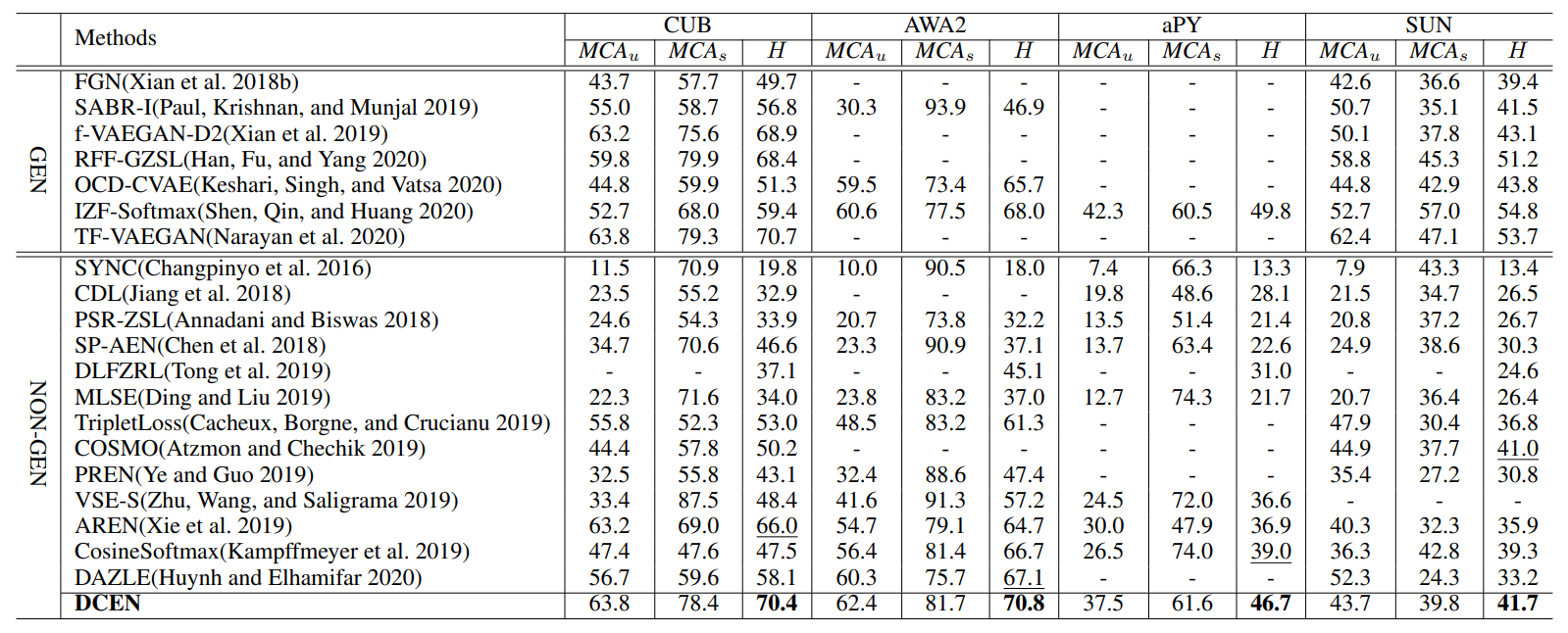

Results of GZSL on four classification benchmarks. Generative methods (GEN) utilize extra synthetic unseen domain data for training.

Acknowledgements:

This work was supported by the National Key R&D Program of China under Grant No. 2020AAA0108602, the National Natural Science Foundation of China (NSFC) under Grants 61632006 and 62076230, and the Fundamental Research Funds for the Central Universities under Grant WK3490000003.

BibTeX:

@inproceedings{Wang2021DCEN,
  author = {Chaoqun Wang and Xuejin Chen and Shaobo Min and Xiaoyan Sun and Houqiang Li},
  title = {Task-Independent Knowledge Makes for Transferable Representations for Generalized Zero-Shot Learning},
  booktitle = {Thirty-Fifth {AAAI} Conference on Artificial Intelligence, {AAAI} 2021},
  year = {2021},
  pages = {2710--2718}
}

Downloads:

Disclaimer: The paper listed on this page is copyright-protected. By clicking on the paper link below, you confirm that you or your institution have the right to access the corresponding PDF file.

Copyright © 2021 USTC-VGG, USTC