
Semantics-Preserving Sketch Embedding for Face Generation

Binxin Yang, Xuejin Chen*, Chaoqun Wang, Chi Zhang, Zihan Chen, Xiaoyan Sun

National Engineering Laboratory for Brain-inspired Intelligence Technology and Application, University of Science and Technology of China

IEEE Transactions on Multimedia, 2023


Photorealistic face images generated by our system from sketches in a wide variety of styles, with precise semantic control. Our method effectively distills semantics from rough sketches and conveys them accurately to the latent space of a pretrained face generator. From sketches in an edge style with fine details (first row), a concise contour style (second row), and a freehand style with geometric distortion (third row), our approach generates high-quality face images in many appearance modes while preserving the stroke semantics of all facial parts and accessories.

Abstract:

With recent advances in image-to-image translation, remarkable progress has been made in generating face images from sketches. However, existing methods frequently fail to generate images whose details are semantically and geometrically consistent with the input sketch, especially when various decorative strokes are drawn. To address this issue, we introduce a novel W-W+ encoder architecture that takes advantage of the high expressive power of the W+ space and the semantic controllability of the W space. We also introduce an explicit intermediate representation for sketch semantic embedding. With a semantic feature matching loss for effective semantic supervision, our sketch embedding precisely conveys the semantics of the input sketches to the synthesized images. Moreover, we design a novel sketch semantic interpretation approach that automatically extracts semantics from vectorized sketches. We conduct extensive experiments on both synthesized and hand-drawn sketches, and the results demonstrate the superiority of our method over existing approaches in both semantic preservation and generalization ability.
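This page does not spell out the form of the semantic feature matching loss. As a rough illustration only, the sketch below implements a common feature-matching formulation: a weighted mean L1 distance between paired intermediate feature maps of the synthesized image and the target face. The feature extractor that produces these maps, the L1 distance, and the per-layer weights are all assumptions, not the authors' definition.

```python
import numpy as np

def semantic_feature_matching_loss(feats_generated, feats_target, weights=None):
    """Weighted mean L1 distance between paired feature maps (a sketch).

    feats_generated / feats_target: lists of arrays with matching shapes,
    e.g. activations of a hypothetical semantic network evaluated on the
    synthesized image and on the ground-truth face.
    weights: optional per-layer weights (assumption); defaults to uniform.
    """
    if len(feats_generated) != len(feats_target):
        raise ValueError("feature lists must have the same length")
    if weights is None:
        weights = [1.0] * len(feats_generated)
    total = 0.0
    for w, fg, ft in zip(weights, feats_generated, feats_target):
        if fg.shape != ft.shape:
            raise ValueError(f"shape mismatch: {fg.shape} vs {ft.shape}")
        # Mean absolute difference for this layer, scaled by its weight.
        total += w * float(np.mean(np.abs(fg - ft)))
    # Normalize so the loss is a weighted average over layers.
    return total / sum(weights)

zeros = [np.zeros((1, 4, 4))]
ones = [np.ones((1, 4, 4))]
print(semantic_feature_matching_loss(zeros, ones))  # 1.0
```

Matching features rather than raw pixels is what lets such a loss supervise semantics (which part is an eye, a hat, glasses) without demanding pixel-exact agreement in appearance.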


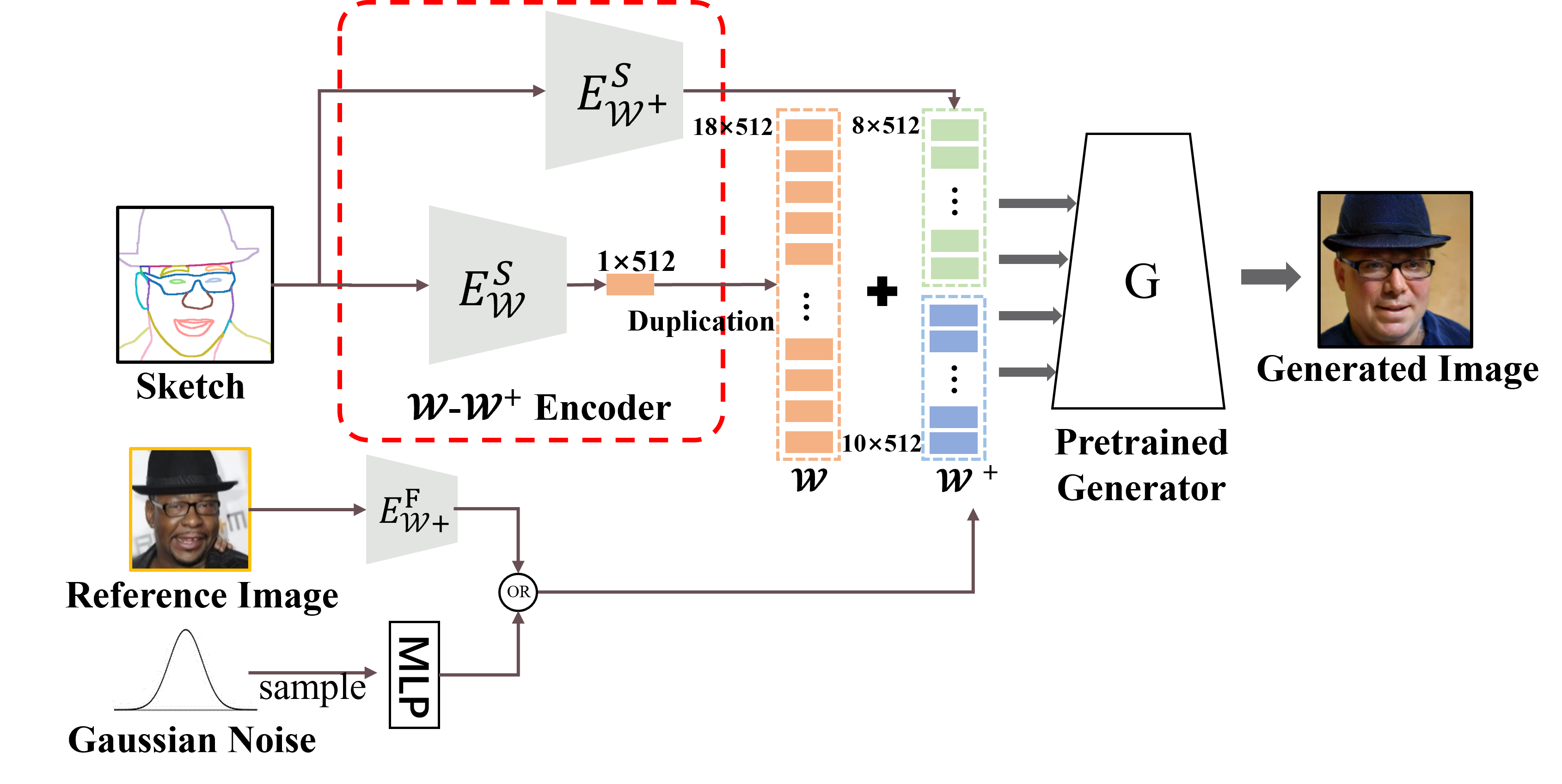

Our semantics-preserving sketch embedding for face synthesis. To generate the 18 style codes for the pretrained StyleGAN generator, our W-W+ encoder comprises two encoders that map the input sketch with stroke labels to the W and W+ latent spaces, respectively. The W space, with its low degrees of freedom (DoF), ensures precise semantic controllability, while the high-DoF W+ space ensures high perceptual quality and low distortion of the synthesized images. Fine-level appearance attributes can be controlled by a reference image or by a randomly sampled latent vector.
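The caption states that the two encoders together produce the 18 style codes but not how their outputs are combined. The sketch below shows one plausible fusion, assumed here rather than taken from the paper: the single W code is broadcast to all 18 layers (low DoF), and the W+ encoder contributes a per-layer residual (high DoF). The function name `fuse_w_wplus` and the additive scheme are hypothetical; 18 codes of 512 dimensions matches a 1024x1024 StyleGAN generator.

```python
import numpy as np

NUM_LAYERS = 18   # style inputs of a StyleGAN generator at 1024x1024
LATENT_DIM = 512  # dimensionality of each style code

def fuse_w_wplus(w, wplus_residual):
    """Hypothetical fusion of W and W+ encoder outputs into 18 style codes.

    w:              (512,) code from the W encoder, shared by every layer.
    wplus_residual: (18, 512) per-layer residuals from the W+ encoder.
    Returns an (18, 512) array: the shared W code plus a per-layer offset.
    """
    w = np.asarray(w, dtype=np.float64)
    residual = np.asarray(wplus_residual, dtype=np.float64)
    if w.shape != (LATENT_DIM,):
        raise ValueError(f"expected w of shape ({LATENT_DIM},), got {w.shape}")
    if residual.shape != (NUM_LAYERS, LATENT_DIM):
        raise ValueError(
            f"expected residual of shape ({NUM_LAYERS}, {LATENT_DIM}), got {residual.shape}"
        )
    # Broadcasting repeats the single W code across all layers (low DoF);
    # the residual lets each layer deviate from it (high DoF, as in W+).
    return w[None, :] + residual

rng = np.random.default_rng(0)
codes = fuse_w_wplus(rng.standard_normal(LATENT_DIM),
                     0.1 * rng.standard_normal((NUM_LAYERS, LATENT_DIM)))
print(codes.shape)  # (18, 512)
```

With a zero residual the output collapses to a pure W code, so under this assumed scheme the size of the residual directly trades semantic controllability against expressive power.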


Applications:


Results of local face editing. Despite the high level of abstraction in freehand sketches, our approach still supports precise local editing. Our method controls the geometric and semantic information of the generated face images through sketches, without interference from appearance.


Appearance manipulation.

Our method effectively disentangles the influence of sketches and reference images on the synthesized face images.
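A common way to take appearance from a reference image in StyleGAN is style mixing: keep the coarse and middle style codes (geometry, here coming from the sketch) and copy the fine-level codes from the reference image's latent codes. The sketch below illustrates that idea. It assumes the reference codes come from some GAN-inversion step not shown here, and the split at layer 8 follows StyleGAN's usual coarse/middle/fine grouping; the split this method actually uses is not stated on this page, and `mix_appearance` is a hypothetical name.

```python
import numpy as np

def mix_appearance(sketch_codes, reference_codes, first_fine_layer=8):
    """Style-mixing sketch: geometry from the sketch, fine appearance from a reference.

    sketch_codes, reference_codes: (num_layers, dim) arrays of style codes.
    first_fine_layer: index of the first layer treated as "fine" (assumption).
    """
    s = np.asarray(sketch_codes)
    r = np.asarray(reference_codes)
    if s.ndim != 2 or s.shape != r.shape:
        raise ValueError(f"need matching 2-D code arrays, got {s.shape} and {r.shape}")
    if not 0 <= first_fine_layer <= s.shape[0]:
        raise ValueError("first_fine_layer out of range")
    mixed = s.copy()  # leave the caller's sketch codes untouched
    # Coarse/middle layers keep the sketch's codes; fine layers take the reference's.
    mixed[first_fine_layer:] = r[first_fine_layer:]
    return mixed

sketch = np.zeros((18, 512))
reference = np.ones((18, 512))
mixed = mix_appearance(sketch, reference)
print(mixed[:8].max(), mixed[8:].min())  # 0.0 1.0
```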


Sketch-based face generation with random appearance codes. Given the same sketch, our method can generate face images with diverse appearances from noise vectors.
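To illustrate how noise vectors can vary appearance while the sketch fixes geometry, the sketch below samples z from a standard normal, maps it to a w code, and writes that code into the fine layers only. A real system would use the pretrained StyleGAN mapping network (pixel normalization followed by an 8-layer MLP with leaky ReLU); here `toy_mapping` is a hypothetical two-layer stand-in with random weights, and the layer split at 8 is likewise an assumption.

```python
import numpy as np

NUM_LAYERS, LATENT_DIM = 18, 512

def toy_mapping(z, weights):
    """Hypothetical stand-in for StyleGAN's mapping network."""
    x = z / np.sqrt(np.mean(z ** 2) + 1e-8)  # pixel norm on the input noise
    for W in weights:
        x = x @ W
        x = np.where(x > 0, x, 0.2 * x)      # leaky ReLU, slope 0.2
    return x

def random_appearances(sketch_codes, n, first_fine_layer=8, seed=0):
    """Return n code sets sharing the sketch's coarse/middle layers (assumed split)."""
    rng = np.random.default_rng(seed)
    # Random weights only for illustration; not a trained network.
    weights = [rng.standard_normal((LATENT_DIM, LATENT_DIM)) / np.sqrt(LATENT_DIM)
               for _ in range(2)]
    outputs = []
    for _ in range(n):
        z = rng.standard_normal(LATENT_DIM)
        w = toy_mapping(z, weights)
        codes = np.array(sketch_codes, dtype=np.float64, copy=True)
        codes[first_fine_layer:] = w  # same w broadcast to every fine layer
        outputs.append(codes)
    return np.stack(outputs)

samples = random_appearances(np.zeros((NUM_LAYERS, LATENT_DIM)), n=3)
print(samples.shape)  # (3, 18, 512)
```

Each sample keeps the sketch-derived layers identical, so only appearance should change across the generated faces, which is the behavior the figure above shows.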

Acknowledgements:

This work was supported in part by the National Key R&D Program of China under Grant No. 2020AAA0108600 and in part by the National Natural Science Foundation of China under Grants 62076230 and 62032006.

BibTeX:

@article{Yang2023, author = {Binxin Yang and Xuejin Chen and Chaoqun Wang and Chi Zhang and Zihan Chen and Xiaoyan Sun}, title = {Semantics-Preserving Sketch Embedding for Face Generation}, journal = {IEEE Transactions on Multimedia}, year = {2023} }

Downloads:

Disclaimer: The paper listed on this page is copyright-protected. By clicking on the paper link below, you confirm that you or your institution have the right to access the corresponding PDF file.


Copyright © 2023 USTC-VGG, USTC