Learning-Based Dexterous Manipulation (2024)

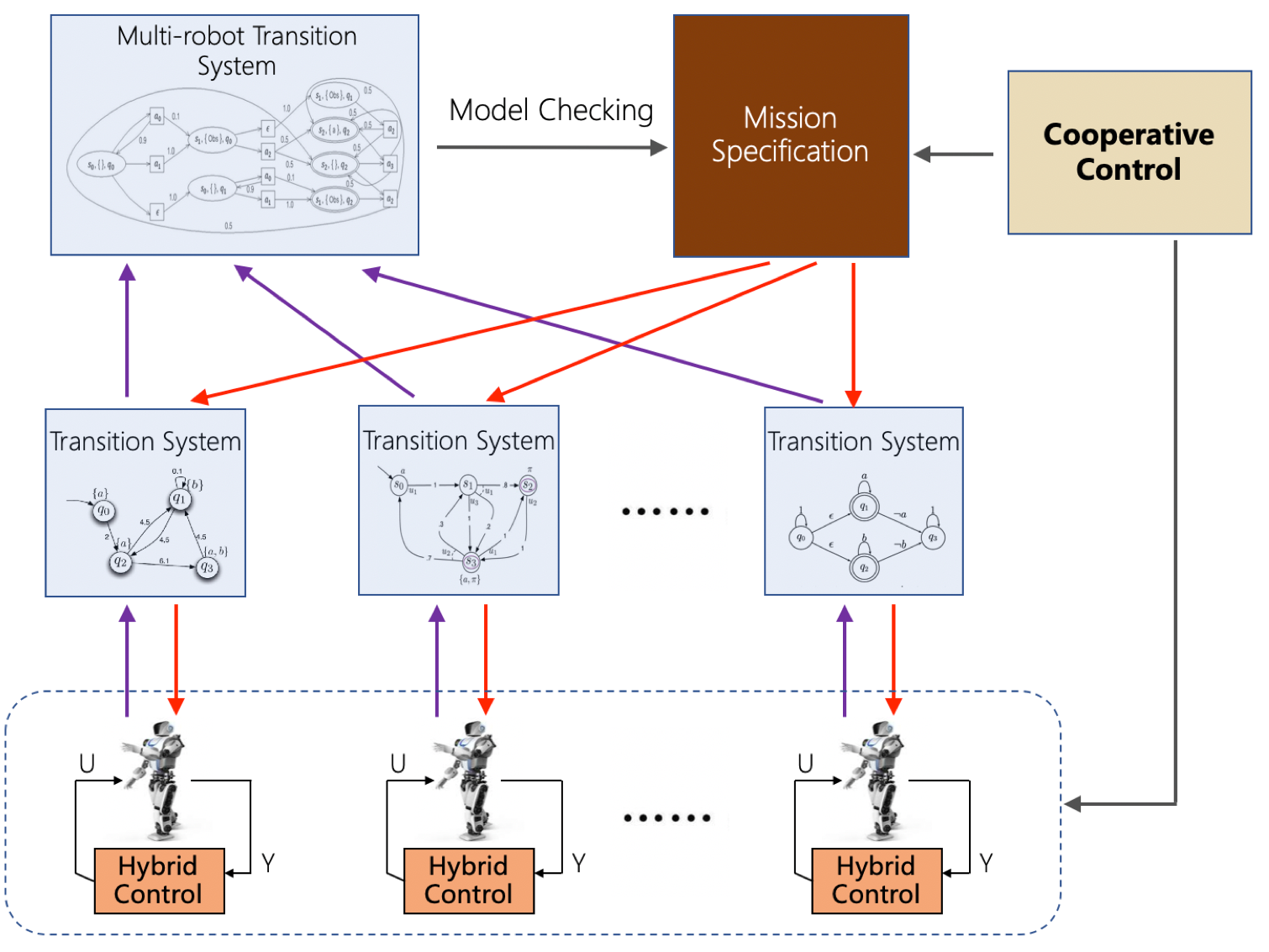

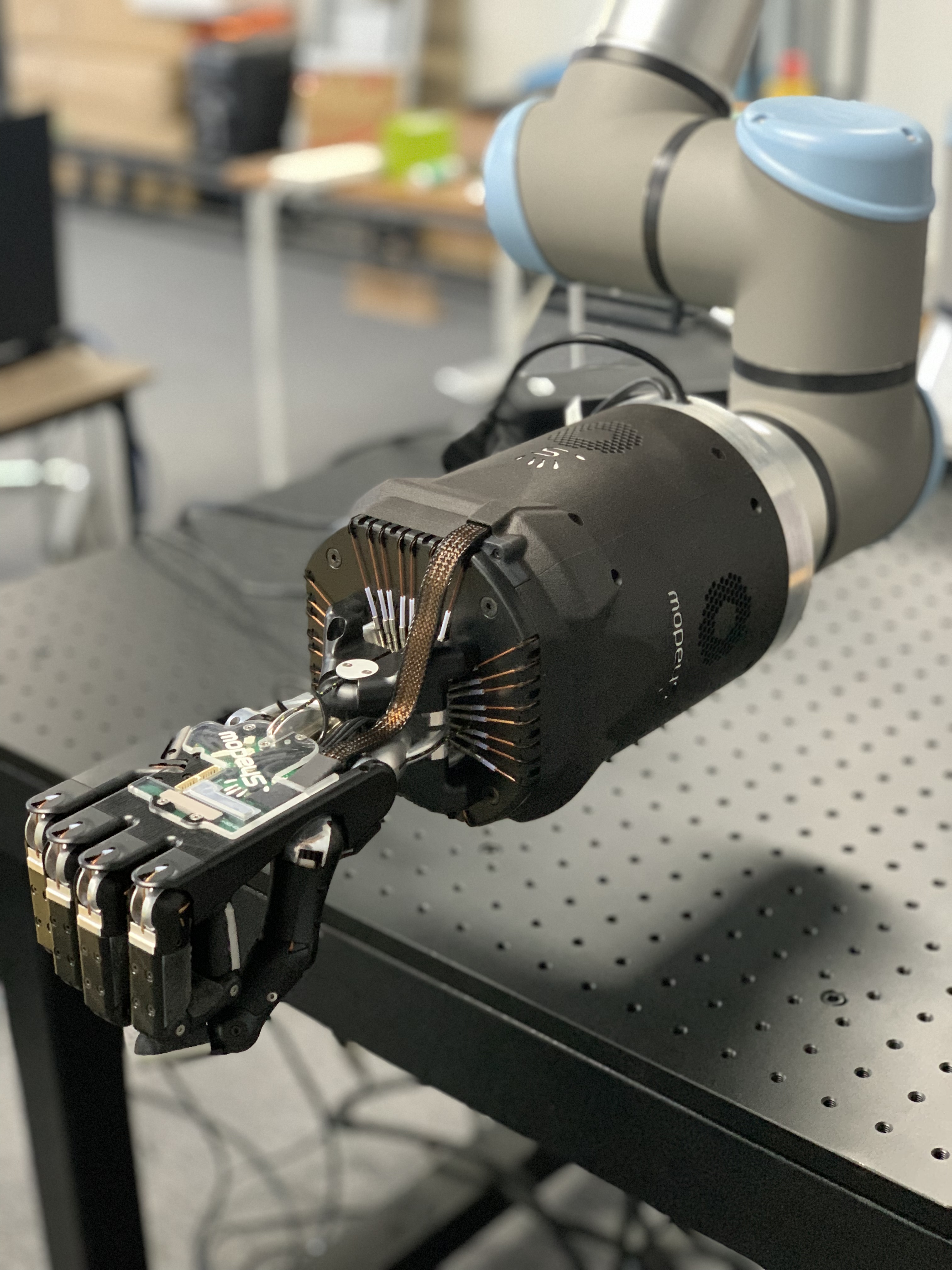

Reinforcement learning has shown great potential for learning dexterous manipulation policies. While many existing works focus on rigid objects, manipulating articulated objects and generalizing across object categories remain open problems. To address these challenges, we develop a novel framework that enhances diffusion policy with linear temporal logic (LTL) representations and affordance learning, improving the learning efficiency and generalizability of dexterous manipulation of articulated objects.
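To make the role of an LTL task specification more concrete, here is a minimal sketch of checking a temporal formula against a finite rollout. It is only an illustration under stated assumptions, not the framework's actual representation: the proposition names (`grasped_handle`, `drawer_open`), the `holds` helper, and the finite-trace semantics are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import FrozenSet, Sequence, Union

# A trace is a sequence of time steps; each step is the set of
# propositions that are true at that step (hypothetical labels).
Trace = Sequence[FrozenSet[str]]


@dataclass(frozen=True)
class Atom:
    name: str


@dataclass(frozen=True)
class And:
    left: "Formula"
    right: "Formula"


@dataclass(frozen=True)
class Eventually:
    body: "Formula"


@dataclass(frozen=True)
class Always:
    body: "Formula"


Formula = Union[Atom, And, Eventually, Always]


def holds(formula: Formula, trace: Trace, t: int = 0) -> bool:
    """Evaluate a formula at step t of a finite trace (finite-trace semantics)."""
    if isinstance(formula, Atom):
        return t < len(trace) and formula.name in trace[t]
    if isinstance(formula, And):
        return holds(formula.left, trace, t) and holds(formula.right, trace, t)
    if isinstance(formula, Eventually):
        # True if the body holds at some step from t onward.
        return any(holds(formula.body, trace, k) for k in range(t, len(trace)))
    if isinstance(formula, Always):
        # True if the body holds at every step from t onward.
        return all(holds(formula.body, trace, k) for k in range(t, len(trace)))
    raise TypeError(f"unsupported formula: {formula!r}")


# Assumed example task: eventually grasp the handle and, from that
# point on, eventually reach a state where the drawer is open.
task = Eventually(And(Atom("grasped_handle"), Eventually(Atom("drawer_open"))))

# Two hypothetical rollouts, already abstracted into proposition sets.
good = [
    frozenset(),
    frozenset({"grasped_handle"}),
    frozenset({"grasped_handle", "drawer_open"}),
]
bad = [
    frozenset({"drawer_open"}),
    frozenset({"grasped_handle"}),
    frozenset(),
]

print(holds(task, good))
print(holds(task, bad))
```

A formula like this encodes the ordering of subgoals (grasp first, then open), which is one plausible way a temporal-logic specification could structure an articulated-object task; how the framework actually combines such specifications with the diffusion policy is not described here.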